Making robots smarter is a challenge. Teaching them to think and learn like humans is even harder.

Amisha Bhaskar, a fourth-year computer science doctoral student at the University of Maryland, is focused on this line of work, combining fresh ideas and novel technology to make robots more adaptable, efficient and reliable in complex, real-world environments.

Bhaskar recently presented her work at the Amazon Robotics Fall Research Symposium & Ph.D. Communication Competition in Boston, an event that highlights standout Ph.D. research and fosters knowledge exchange with Amazon scientists, engineers, and leadership.

Bhaskar was one of only 10 Ph.D. students nationwide to make the finals in the competition, where she presented the bulk of her Ph.D. thesis via a five-slide talk focusing on four areas critical for deployable robots: long-term planning, adaptability, data efficiency, and multimodal sensing.

While she didn’t win the competition, Bhaskar says the event was valuable because it tested more than technical skill.

“It was rewarding to condense years of research into a crisp story for a broader audience,” she says. “Deciding what technical detail to leave out without oversimplifying was the biggest challenge.”

Bhaskar’s presentation highlighted four of her research thrusts:

—“Long-horizon Visual Action based Food Acquisition” (LAVA), which demonstrates long-horizon planning in robot-assisted feeding for people with mobility impairments.

—“PLANRL: A Motion Planning and Imitation Learning Framework to Bootstrap Reinforcement Learning,” which combines classical motion planning with reinforcement learning for improved robot adaptability in unfamiliar environments.

—“Sketch-to-Skill,” which allows robots to learn complex tasks from simple hand-drawn sketches, enhancing sample efficiency.

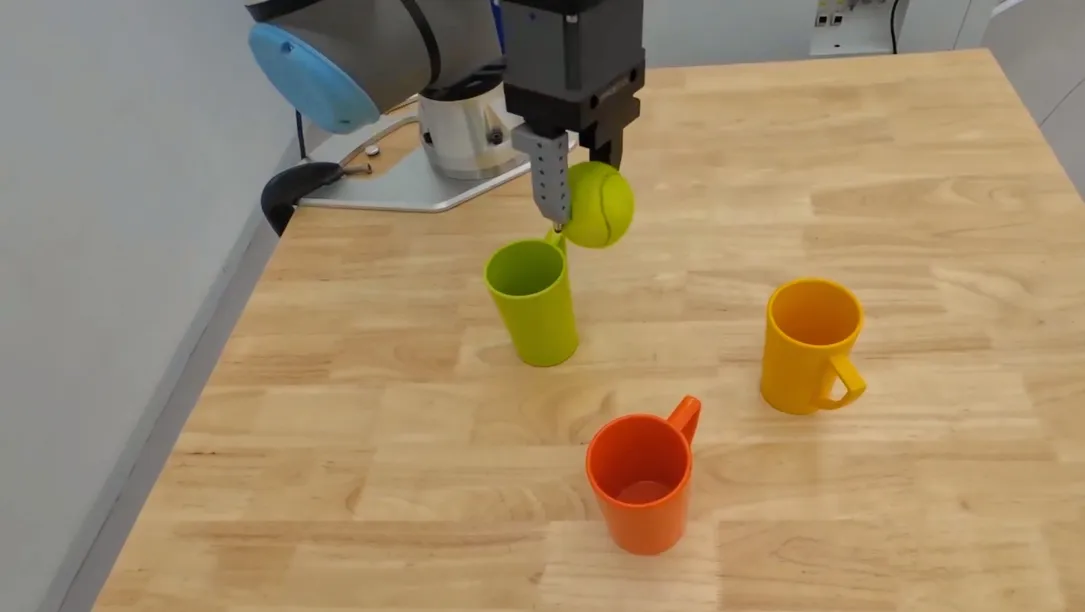

—A project that teaches robots to imitate complex actions using multiple sensory inputs, including vision, touch and motion, in real time.

Bhaskar notes that the fourth project listed has been submitted to the 2026 International Conference on Learning Representations in Rio de Janeiro, Brazil, and that she filed a patent on the work during her recent summer internship at Mitsubishi Electric Research Laboratories.

Pratap Tokekar, an associate professor of computer science and Bhaskar’s academic adviser, praised her ongoing body of work.

“Her research focuses on making robot learning both more purposeful and more intuitive,” says Tokekar, who has an appointment in the University of Maryland Institute for Advanced Computer Studies. “What I find particularly noteworthy is her focus on helping robots understand when to learn and how to learn efficiently from compact human input, rather than just learning for learning’s sake.”

—Story by Melissa Brachfeld, UMIACS communications group