We’ve all seen a basketball coach drawing a play on a whiteboard—arrows, boxes and circles representing a choreographed motion that, if executed just right, sinks the game-winning shot. Now picture using this same approach to teach a robot to perform complex tasks in the real world.

That’s the promise of Sketch-to-Skill, a novel robot learning framework developed by researchers at the University of Maryland. Instead of relying on time-consuming and often expensive demonstrations or tedious remote-control training, robots learn from simple hand drawings—turning a time-honored method of human communication into practical robotic behavior.

The UMD team will present its work at the Robotics: Science and Systems conference held June 21–25 at the University of Southern California.

Amisha Bhaskar, a third-year computer science doctoral student and a lead author of the study describing the framework, says her team’s goal is to make robot training less of a specialized technical skill, helping to make such autonomous systems more practical for use throughout society.

“Most existing methods rely on carefully guided demonstrations or teleoperation, which can be hard to scale,” Bhaskar says. “But we noticed people are good at expressing intent with simple sketches. So, we started wondering: What if you could just draw a rough idea of what should happen, from a couple of angles, and let the robot figure out the rest?”

That idea resonated with Peihong Yu, a sixth-year computer science doctoral student, who was already studying similar concepts in multi-agent settings, which involve multiple autonomous agents interacting to achieve a common goal.

Yu, another lead author of the study, and Bhaskar together realized that sketch-based trajectories might be especially helpful for manipulation tasks with a robotic arm.

Bhaskar had already experienced the monotony of conducting high-quality demonstrations for the robotic arm system to observe and learn from. This included time spent setting up objects to grasp and writing code for the robot's AI-based neural network to interpret those objects and decide on a course of action.

The team, which also includes first-year computer science doctoral student Anukriti Singh; Zahiruddin Mahammad M.Eng. ’25; and Pratap Tokekar, an associate professor of computer science with an appointment in the University of Maryland Institute for Advanced Computer Studies, created a system that translates rough sketches into 3D trajectories a robot can use to perform physical tasks.

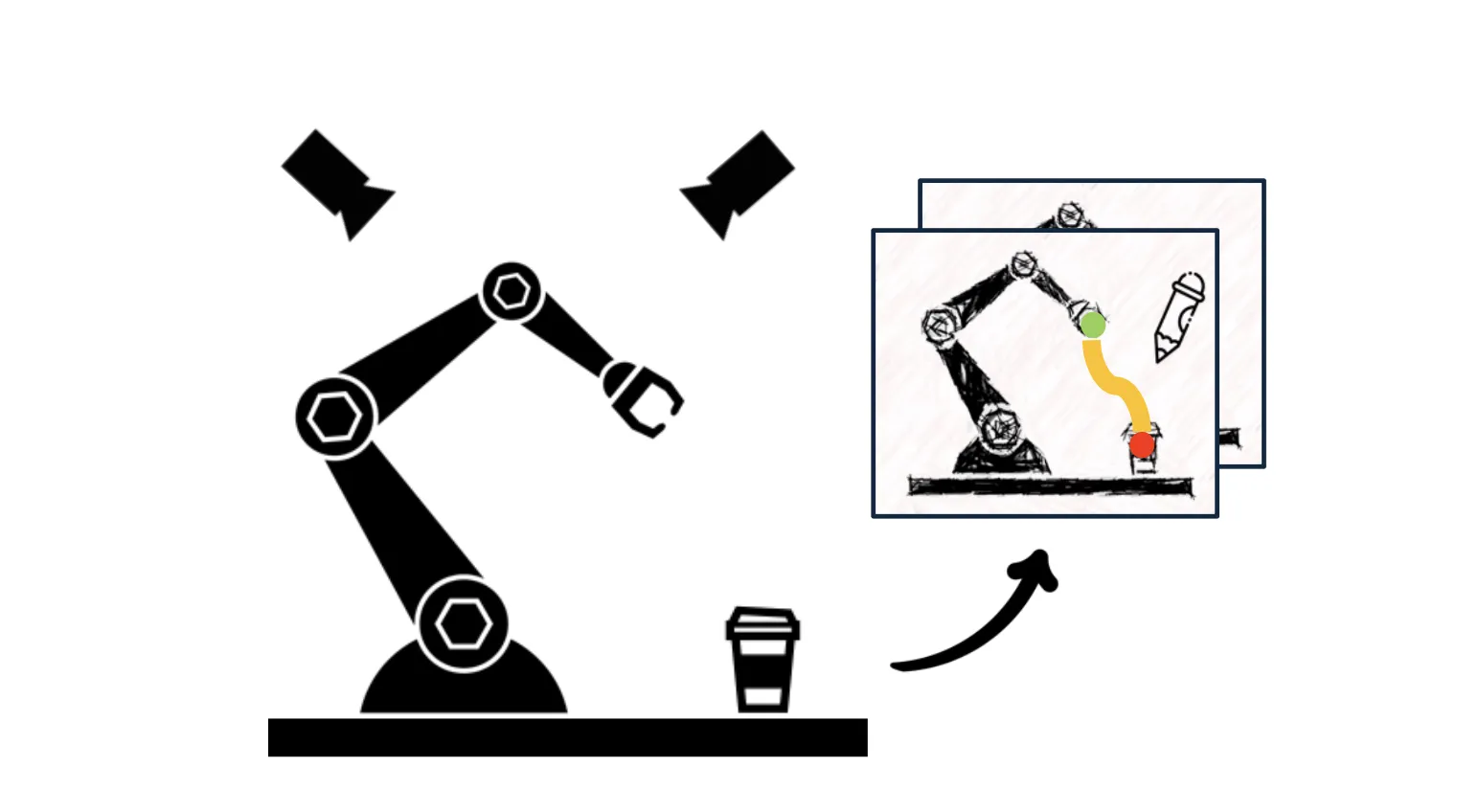

Here’s how it works: A user sketches the robot’s proposed trajectory, using just lines and curves, on two RGB images (standard color images composed of red, green and blue channels) taken from different viewpoints. A neural network converts this pair of 2D sketches into a full 3D trajectory, which the robot then executes. It performs the motion using open-loop control, in which the control action does not depend on the system’s output, so the robot receives no real-time feedback while moving. Along the way, it gathers data to refine its future performance.
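The article does not describe the neural network’s internals, so the Python sketch below is only a geometric toy to make the idea of "two 2D views in, one 3D path out" concrete. It is not the UMD team’s method. It assumes two idealized orthographic cameras: a front view that shows a stroke’s (x, z) coordinates and a side view that shows (y, z). Each stroke is resampled to the same number of points by arc length, and the two views are merged point by point. The `send_command` callback is a hypothetical stand-in for a robot driver, used to show what open-loop execution means: waypoints are sent in order and the robot’s state is never read back.

```python
import math
from typing import Callable, List, Tuple

Point2 = Tuple[float, float]
Point3 = Tuple[float, float, float]


def resample(stroke: List[Point2], n: int) -> List[Point2]:
    """Resample a polyline (>= 2 points) to n >= 2 points evenly spaced by arc length."""
    # Cumulative arc length at each vertex of the stroke.
    dists = [0.0]
    for (x0, y0), (x1, y1) in zip(stroke, stroke[1:]):
        dists.append(dists[-1] + math.hypot(x1 - x0, y1 - y0))
    total = dists[-1]

    out: List[Point2] = []
    j = 0  # index of the current segment's start vertex
    for i in range(n):
        target = total * i / (n - 1)
        # Advance to the segment that contains the target arc length.
        while j < len(stroke) - 2 and dists[j + 1] < target:
            j += 1
        seg = dists[j + 1] - dists[j]
        t = 0.0 if seg == 0 else (target - dists[j]) / seg
        (x0, y0), (x1, y1) = stroke[j], stroke[j + 1]
        out.append((x0 + t * (x1 - x0), y0 + t * (y1 - y0)))
    return out


def lift_two_views(front: List[Point2], side: List[Point2], n: int = 20) -> List[Point3]:
    """Toy lift (assumption): front gives (x, z), side gives (y, z); heights are averaged."""
    f = resample(front, n)
    s = resample(side, n)
    return [(fx, sy, (fz + sz) / 2) for (fx, fz), (sy, sz) in zip(f, s)]


def execute_open_loop(trajectory: List[Point3], send_command: Callable[[Point3], None]) -> None:
    """Send every waypoint in order without reading any feedback (open-loop control)."""
    for waypoint in trajectory:
        send_command(waypoint)


if __name__ == "__main__":
    path = lift_two_views([(0, 0), (1, 1)], [(0, 0), (2, 1)], n=3)
    execute_open_loop(path, print)  # print stands in for a real robot driver
```

In a learned system, the network would replace `lift_two_views` and cope with real camera geometry and imprecise hand drawings; the resample-and-merge step here only shows why two viewpoints are needed to pin down depth.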

In simulated environments, the approach achieved 96% of the performance of expert demonstration-based models and outperformed pure reinforcement learning methods by 170%. In physical experiments using a UR3e robot—a lightweight, collaborative robotic arm designed for precision tasks like assembly and pick-and-place—the system succeeded in 80–90% of tasks, such as pressing buttons, moving toast and picking up objects, without any need for teleoperation.

The approach could lower the barrier to teaching robots, especially in real-world settings where robotics specialists are not on hand or teleoperation is impractical.

The team is now exploring how to adapt the framework to more complex tasks by incorporating user feedback and opening it up to other simple teaching styles. They’re also investigating how to combine visual input with language and video for richer communication between people and machines.

“I see this as a step toward more accessible and flexible human-robot interaction,” Yu says. “We’re excited about enabling easier, more natural ways for people—especially nonexperts—to instruct robots, and we hope to build on this work with more interactive and multimodal capabilities in the future.”

—Story by Melissa Brachfeld, UMIACS communications group