Many robotic systems rely on sophisticated cameras and sensors to “see” their surroundings. Humans do this naturally; babies and toddlers learn to judge size and distance by comparing objects to their own bodies. Robots lack this ability, and most need precise measurements and calibration to understand their environment.

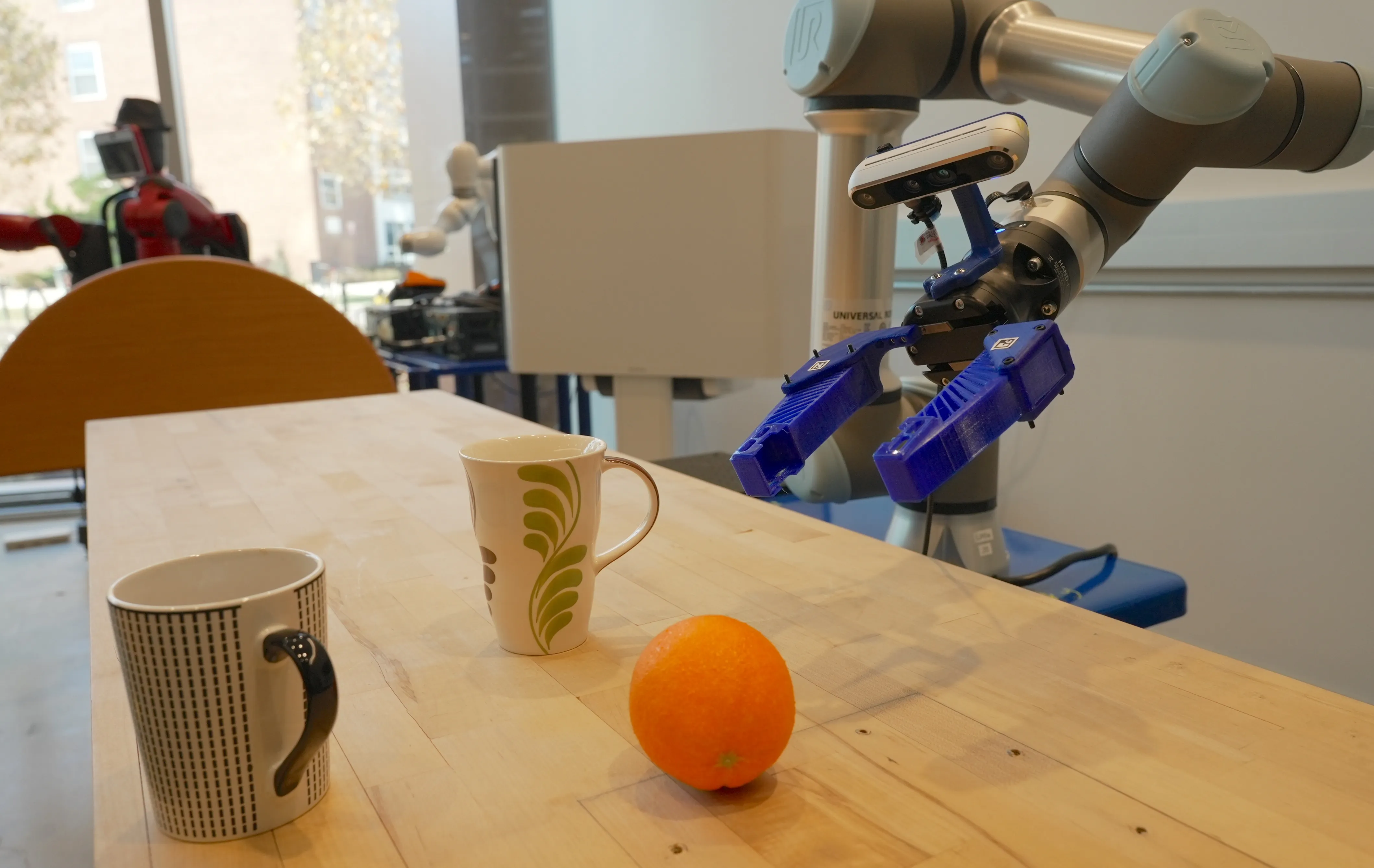

Seeking a solution to this problem, University of Maryland researchers are developing robotic sensing abilities that mimic biological systems, which ultimately could allow robots to navigate and manipulate objects without pre-programmed measurements.

Their work, recently published in npj Robotics, demonstrates how robots can “learn” from their own actions, such as pushing, jumping, or reaching, to better understand the world around them.

This method mirrors how insects or small rodents sense their surroundings, says Levi Burner, lead author of the paper, who successfully defended his Ph.D. thesis on this topic in September.

Burner, who earned his degree in electrical and computer engineering, says he was initially driven to look for biologically inspired solutions by watching his dog and noticing how naturally she judged distances. That stood in contrast to the arduous, and often expensive, process of continuously telling a robot what a meter is, what an inch is, what a foot is.

At some point, Burner explains, you become frustrated and ask, “Why am I teaching this robot what a meter is so that it can do basic things like stop in front of a door, or reach out its hand and try to pick something up?”

Burner relied heavily on his academic advisers, Yiannis Aloimonos and Cornelia Fermüller, in coming up with a workable solution.

Fermüller’s background in neuromorphic computing helped shape the team’s approach, and her skill at sharpening and communicating the project’s concepts was essential to polishing the text of the published paper, Burner says.

Fermüller, a research scientist in the University of Maryland Institute for Advanced Computer Studies (UMIACS), says that although tremendous strides have been made in the artificial intelligence systems that help robots navigate, considerable inefficiency remains.

“By taking inspiration from biology, which uses different representations to reach its goals, we can close this gap and solve tasks much more efficiently. We need to rethink pouring vast resources into training so-called end-to-end AI systems for every individual task,” she says.

Aloimonos, a professor of computer science with an appointment in UMIACS, spoke highly of Burner’s Ph.D. work that led to the npj Robotics paper.

“Levi’s thesis brings a breath of fresh air within the cacophony of current approaches to robotics and AI, because it takes us one step closer to achieving embodied robotics,” Aloimonos says. “It is an analysis of visuo-motor control in active perception agents.”

Before the paper was published, Burner tested the team’s theory by having a robot determine on its own whether it could fit through an opening, using feedback from its own movements. In another test, a robot learned to jump across gaps by repeatedly moving back and forth, building an internal sense of gravity and distance.

“The most difficult part of any work like this is figuring out experiments that can further your idea, especially for a concept that has so many potential applications,” he says. “For example, what if all the self-driving cars in the world didn’t need to know what a meter is? That’s a huge challenge that I can’t solve as a graduate student in five years.”

But Burner, who now works as a postdoctoral associate in the Intelligent Sensing Lab led by Chris Metzler and the Perception and Robotics Group led by Aloimonos and Fermüller, does see his team’s work as a paradigm shift for robotics. His hope is that this work will influence future robotics developers.

“I want to communicate and convince other robotics experts that this is a good way to build their systems,” he says. “I want to tell them, ‘No, don’t do that. We could do this instead.’ That’s my goal.”

—Story by Zsana Hoskins, UMIACS communications group