The dream of building game-changing quantum computers, supermachines that encode information in the quantum states of single atoms rather than in conventional bits, has long been hampered by a formidable challenge: quantum error correction.

In a paper recently published in Nature, researchers from Harvard University, the University of Maryland and other institutions demonstrated a new system that detects and removes errors while operating below a key performance threshold, the point beyond which adding more qubits reduces rather than increases the overall error rate. The result could provide a workable solution to the problem.

“For the first time, we combined all essential elements for a scalable, error-corrected quantum computation in an integrated architecture,” said Mikhail Lukin, co-director of the Quantum Science and Engineering Initiative, Joshua and Beth Friedman University Professor, and senior author of the new paper. “These experiments—by several measures the most advanced that have been done on any quantum platform to date—create the scientific foundation for practical large-scale quantum computation.”

In their paper, the team demonstrated a “fault-tolerant” system that uses 448 atomic quantum bits, manipulated through an intricate sequence of techniques to detect and correct errors.

The key mechanisms include physical entanglement, logical entanglement, logical magic and entropy removal. For example, the system uses “quantum teleportation,” which transfers the quantum state of one particle to another particle elsewhere without any physical contact between them.

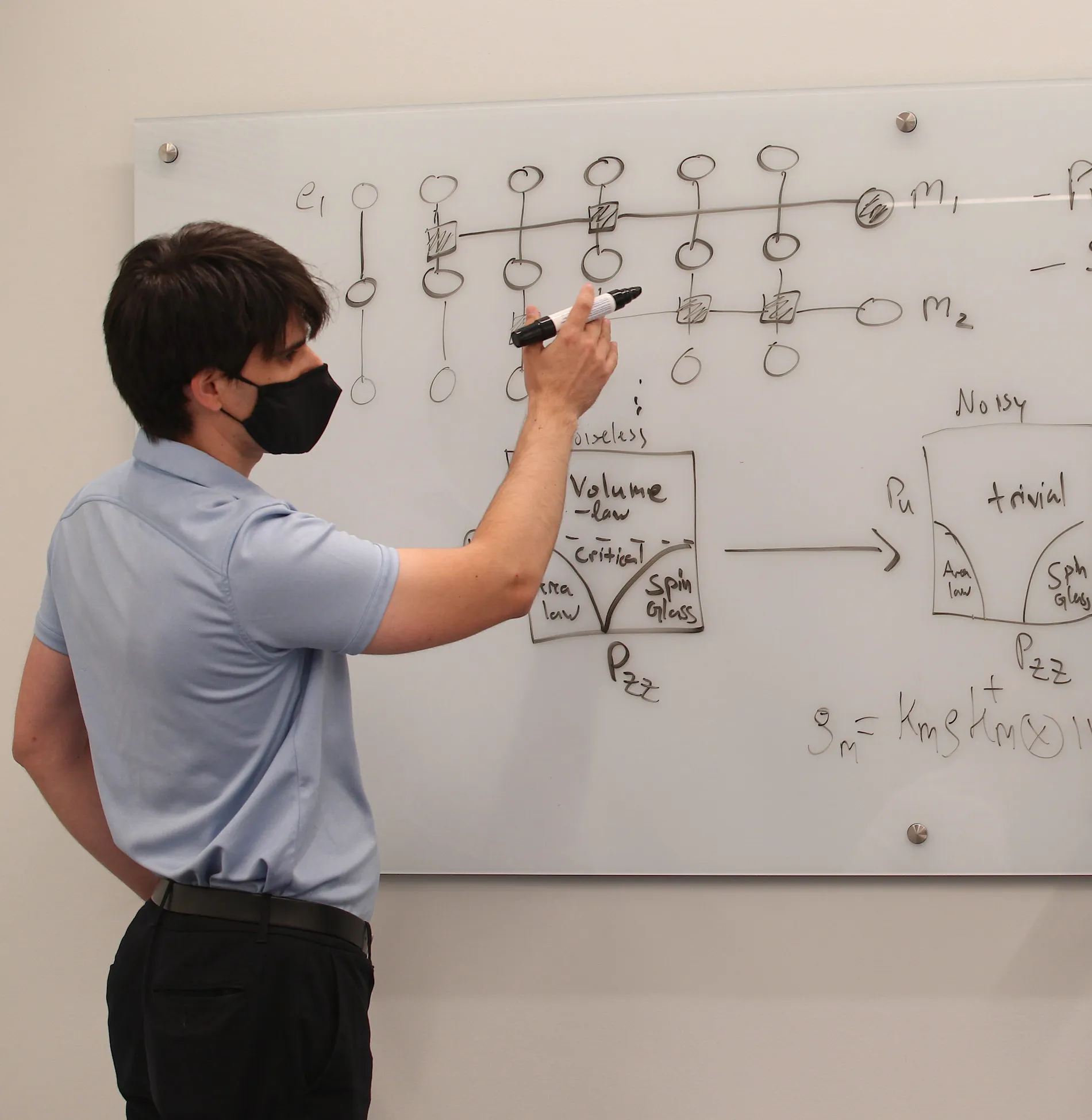

Michael Gullans (in photo), a physicist at the National Institute of Standards and Technology and a Fellow of the Joint Center for Quantum Information and Computer Science (QuICS), was a co-author of the study.

The full story was originally published in The Harvard Gazette.