Nonverbal communication in the workplace—like a nod, hand gesture, or glance—is crucial for building trust and collaboration among co-workers. But for blind individuals, these subtle cues often go unnoticed, creating barriers to effective teamwork.

Research underway at the University of Maryland and Cornell University could soon change that. Researchers from both institutions are developing an embodied (wearable) AI system that can interpret and convey nonverbal signals in a way that strengthens communication and trust between blind and sighted individuals.

The project—supported by two seed grants from the Institute for Trustworthy AI in Law & Society (TRAILS) totaling almost $230,000 in indirect costs—is still in the prototype stage, but the researchers hope to take the technology from their lab space and begin testing it in professional workplace settings within the next 18 months.

“Sight-impaired people continue to play an active role in today’s workforce across sectors and occupations, yet little remains known regarding best practices that can improve their non-verbal interactions with their sighted colleagues,” says Ge Gao, an assistant professor in UMD’s College of Information who is a co-PI on the project.

Gao gives the example of a sighted individual discussing a task with a blind colleague, with the sighted person instinctively turning their head and gazing directly at their work partner, seeking acknowledgement of the idea they are talking about. Unaware of this silent interaction, the blind worker is unresponsive, which can ultimately lead to tension between the co-workers.

The AI-infused technology under development at UMD and Cornell will combine tactile (touch-perceived), haptic (motion- or vibration-initiated) and audio feedback to convey nonverbal information in ways that feel natural and unobtrusive.

The devices, some wearable and others possibly mounted on a desktop, might gently vibrate to signal a teammate’s nod or gesture, or provide brief audio cues describing important nonverbal actions. The AI algorithms used to interpret these signals will range from vision-based systems that detect gestures and body orientation to sensor-driven models that track motion and posture.
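As a rough illustration of the idea described above, the following Python sketch shows one way a detected cue could be routed to a vibration or a short audio message. It is not the researchers' implementation: the cue labels, feedback patterns, confidence threshold, and the names `DetectedCue`, `FEEDBACK_BY_CUE`, and `choose_feedback` are all hypothetical, and the upstream gesture detector is assumed rather than shown.

```python
from dataclasses import dataclass

# Hypothetical cue labels and feedback patterns; none of these names or
# mappings come from the project itself.
FEEDBACK_BY_CUE = {
    "nod": ("haptic", "one short vibration"),
    "gaze": ("haptic", "two short vibrations"),
    "hand_gesture": ("audio", "teammate is gesturing"),
}


@dataclass
class DetectedCue:
    label: str         # e.g. "nod", as reported by an assumed upstream detector
    confidence: float  # detector confidence in the range [0, 1]


def choose_feedback(cue, min_confidence=0.8):
    """Return a (channel, message) pair for a cue, or None to stay silent."""
    if cue.confidence < min_confidence:
        # Skip uncertain detections so the feedback stays unobtrusive.
        return None
    # Unknown cue labels also produce no feedback.
    return FEEDBACK_BY_CUE.get(cue.label)


if __name__ == "__main__":
    print(choose_feedback(DetectedCue("nod", 0.95)))
    print(choose_feedback(DetectedCue("nod", 0.40)))
```

In this sketch, low-confidence or unrecognized cues are dropped rather than announced, reflecting the stated goal that feedback feel natural and unobtrusive. A real system would likely tune such choices through the co-design sessions with blind and sighted participants.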

The TRAILS project is an extension of previous work done by Gao, Huaishu Peng, an assistant professor of computer science at UMD, and Jiasheng Li, a fourth-year computer science doctoral student at UMD advised by Peng.

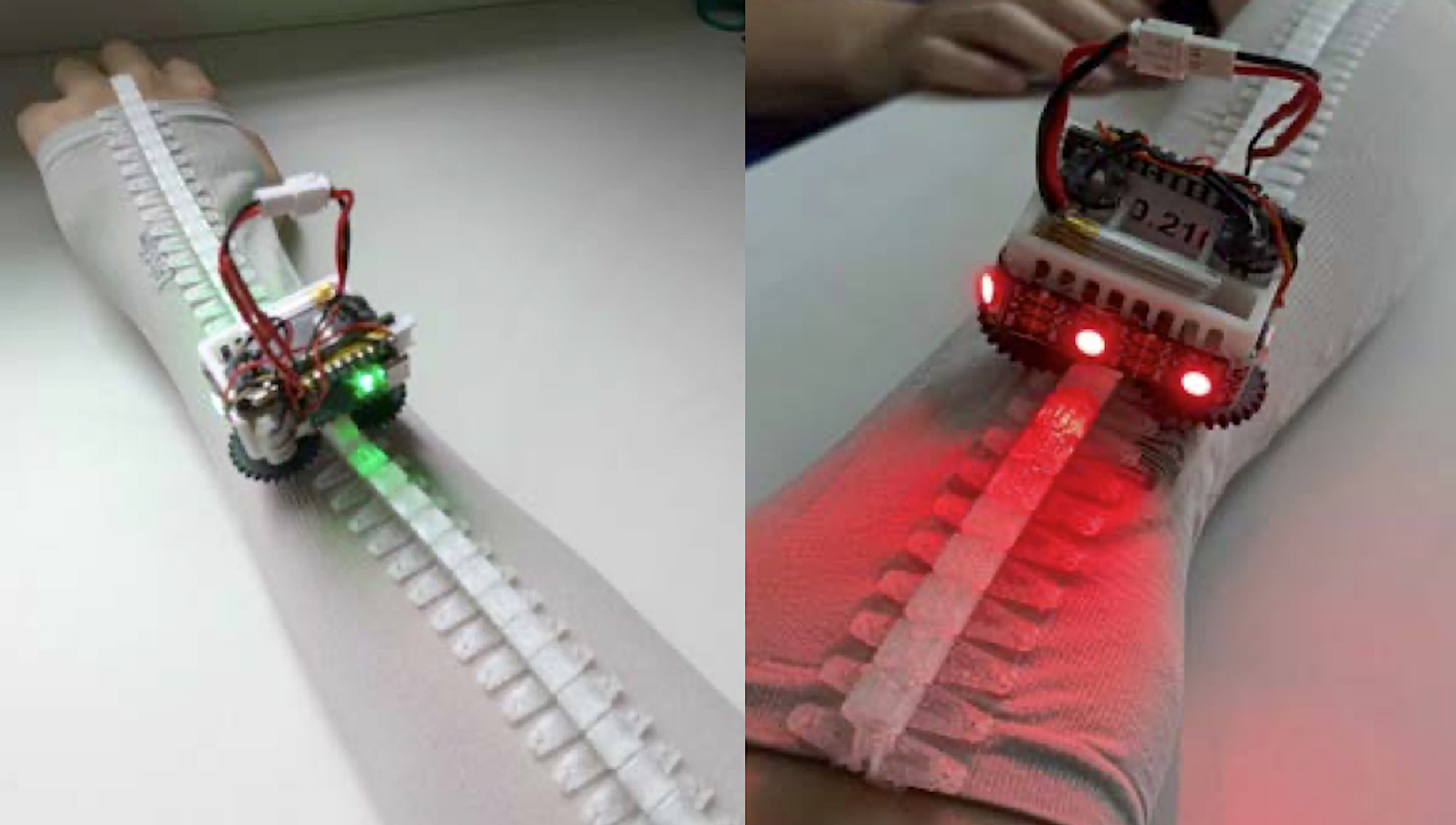

That project, called Calico, involved a miniature wearable robotic system that moved along the human body on a cloth track, using magnets and sensors to position itself for optimal sensing during various physical activities.

Peng says that TRAILS seed funding has allowed the UMD researchers to expand the Calico project and collaborate with Malte Jung, an associate professor of information science at Cornell University, whom Peng and Gao both knew from their time as Ph.D. students on the Ithaca, New York, campus.

“Malte excels in the design and behavioral aspects of human-robot interaction in group and team settings, and that complements our own work in assistive and enabling technology,” says Peng, who in addition to his tenure-track appointment in computer science has an appointment in the University of Maryland Institute for Advanced Computer Studies (UMIACS).

Gao also has an appointment in UMIACS, with the institute providing administrative and technical support for many of the TRAILS seed fund projects.

In their work thus far, the TRAILS team has surveyed more than 100 blind and sighted participants about how they interpret nonverbal cues in workplace settings, partnering with the National Federation of the Blind (NFB) to broaden their outreach. They are also conducting co-design sessions with both blind and sighted people to directly engage them in shaping AI tools that foster better collaboration.

Peng recently received a 2025 Accessibility Inclusion Fellowship from the NFB—a prestigious, year-long award granted to just three professors in the state of Maryland annually. The fellowship supports recipients in integrating accessibility principles into their curriculum and incorporating nonvisual teaching methods into at least one of their courses.

Much of the logistical work for the TRAILS project is handled by graduate student Li, who has designed multiple online surveys, reviewed scores of academic papers related to the team’s work, taken a lead role in recruiting participants for the project, and is now running several workshops.

“Creating technology that truly serves everyone requires listening deeply and designing thoughtfully,” Li says. “I’m driven by the chance to make AI accessible, inclusive, and empowering for all users—especially those often left out of the conversation.”

Li’s dedication to creating accessible technology recently earned him the prestigious Phi Delta Gamma Graduate Fellowship from UMD’s Graduate School, making him the first computer science student to receive the honor in the fellowship’s 20-year history. The award recognizes his interdisciplinary scholarship and supports his continued work on inclusive AI design.

Ultimately, the TRAILS team expects that their work may lead to additional research funding, while also yielding multimodal datasets and important research publications targeting fields such as human-computer interaction, human-robot interaction, and human-centered AI—all areas of scientific discovery that can benefit future efforts working with blind/sighted teams.

“It’s not just building the technology, it’s also learning from the people involved,” Gao says. “This work will facilitate input from both sides of these interactions, valuing the needs of blind individuals while also addressing their sighted partner’s input.”

—Story by Melissa Brachfeld, UMIACS communications group