Facilities and Infrastructure

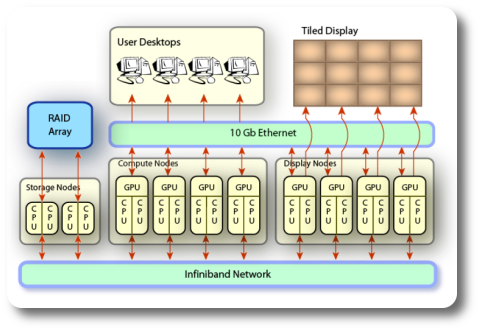

We have built a high-performance computing and visualization cluster that takes advantage of the synergies afforded by coupling central processing units (CPUs), graphics processing units (GPUs), displays, and storage.

Hardware

CPU-GPU Cluster Infrastructure

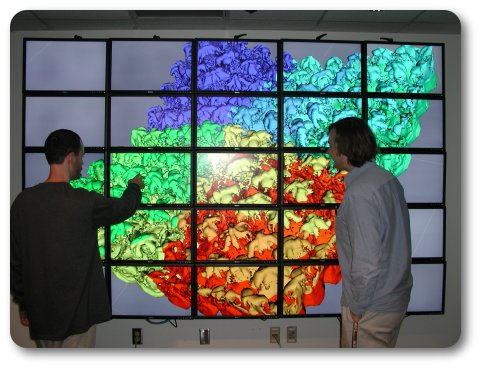

- Tiled display wall of 25 Dell 24-inch 1920 x 1200 LCDs

- 15 CPU/GPU display nodes

- 12 CPU/GPU compute nodes

- 1 CPU/GPU direct interaction node

- 3 storage nodes with 10 TB of storage

- 1 scheduler node

- InfiniBand and traditional 10 Gb Ethernet communication links between nodes

Node configuration:

- Dual Intel Xeon 3 GHz CPUs

- 8 GB of RAM

- 100 GB disk

- 512 MB NVIDIA GeForce 7800 GTX GPU (display nodes)

- 256 MB NVIDIA GeForce 6800 Ultra GPU (other nodes)
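To give a sense of the scale of the tiled display wall listed above, the following minimal sketch computes the aggregate pixel count and a viewport for each panel. The panel count and resolution come from the hardware list; the 5 x 5 arrangement is an assumption (the layout is not stated here), bezels are ignored, and the names `Viewport` and `tile_viewports` are hypothetical.

```python
from dataclasses import dataclass

TILE_W, TILE_H = 1920, 1200   # per-panel resolution, from the hardware list
NUM_TILES = 25                # number of LCDs, from the hardware list
GRID_COLS, GRID_ROWS = 5, 5   # ASSUMPTION: 5 x 5 arrangement, not stated in the text


@dataclass(frozen=True)
class Viewport:
    """Region of the wall-sized virtual framebuffer shown by one panel."""
    col: int
    row: int
    x: int       # left edge in wall pixel coordinates
    y: int       # top edge in wall pixel coordinates
    width: int
    height: int


def tile_viewports(cols=GRID_COLS, rows=GRID_ROWS, w=TILE_W, h=TILE_H):
    """Return one viewport per panel in row-major order, ignoring bezels."""
    return [Viewport(c, r, c * w, r * h, w, h)
            for r in range(rows)
            for c in range(cols)]


viewports = tile_viewports()
wall_width = GRID_COLS * TILE_W
wall_height = GRID_ROWS * TILE_H
total_pixels = NUM_TILES * TILE_W * TILE_H

print(f"{wall_width} x {wall_height} = {total_pixels / 1e6:.1f} megapixels")
# prints "9600 x 6000 = 57.6 megapixels"
```

The total of 57.6 megapixels holds for any arrangement of the 25 panels; only the wall width, wall height, and viewport offsets depend on the assumed grid.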

Software

We have installed several software packages on the pilot cluster. In anticipation of the larger cluster, our work on the operating system has focused on automating the installation of the customized components and on automated mechanisms for updating them across a much larger cluster.

We have configured and tested a number of software packages, including BrookGPU, NVIDIA Cg, the NVIDIA SDK, the NVIDIA Scene Graph SDK, the GNU Compiler Collection (GCC), the NAGWare Fortran compiler, the Fastest Fourier Transform in the West (FFTW), and MATLAB.

We have integrated our CPU-GPU cluster with our standard resource allocation mechanisms: the Portable Batch System (PBS), the Maui Scheduler, and Condor. Users can request immediate allocations for batch or interactive jobs, or they can reserve nodes in advance of a demo. Unused nodes are donated to the Condor pool and may be used by any researcher in the Institute.

We have experimented with several software packages for distributing rendering across the tiled display, including Chromium, DMX, and OpenSG. For scientific visualization tasks, we use ParaView and custom applications built with Kitware's Visualization Toolkit (VTK).
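As an illustration of the batch allocation path through the Portable Batch System mentioned in this section, the sketch below assembles the text of a PBS job script. It only builds a string and submits nothing. The job name, command, node count, and walltime are hypothetical, `render_pbs_script` is an illustrative helper, and the site's actual queue names and resource strings are not given here; `ppn=2` is chosen to match the dual-CPU nodes.

```python
def render_pbs_script(job_name, nodes, procs_per_node, walltime, command):
    """Return the text of a PBS batch script (nothing is submitted here)."""
    lines = [
        "#!/bin/sh",
        f"#PBS -N {job_name}",                          # job name
        f"#PBS -l nodes={nodes}:ppn={procs_per_node}",  # nodes and processors per node
        f"#PBS -l walltime={walltime}",                 # wall-clock limit, HH:MM:SS
        "cd $PBS_O_WORKDIR",                            # directory the job was submitted from
        command,
    ]
    return "\n".join(lines) + "\n"


# Hypothetical job: 4 nodes, 2 processors each, one hour.
script = render_pbs_script(
    job_name="fft_test",
    nodes=4,
    procs_per_node=2,
    walltime="01:00:00",
    command="./fft_benchmark",
)
print(script)
```

A script like this would typically be submitted with `qsub`, while an interactive session would use `qsub -I` with the same resource options.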

We have deployed an infrastructure for interacting with the cluster using standard desktops, cluster-connected workstations, and large-scale display technologies. We have installed the cluster nodes and cluster-connected workstations in a secure data center, with their displays and terminals in the graphics lab and a neighboring public lab. We are using digital KVM as a practical technology for interacting with the CPU-GPU nodes from a data center console or from remote IP clients. Although this technology does not support high-resolution video or adequate refresh rates, it is invaluable for troubleshooting and managing the nodes. We are also evaluating tools that provide software-based remote display access, based on VNC and the HP Remote Workstation Solutions. These solutions allow remote users to monitor the cluster displays at reduced frame rates over local area networks. Such access is a key tool for developers, who may wish to work from their own desks while interacting with the cluster.
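A back-of-the-envelope calculation suggests why remote monitoring runs at reduced frame rates. The sketch below estimates the raw bandwidth needed to stream a single 1920 x 1200 panel. It assumes uncompressed 24-bit color and a 1 Gb/s local network, neither of which is stated here; VNC-style tools compress and send only changed regions, so real figures will differ.

```python
WIDTH, HEIGHT = 1920, 1200         # one display-wall panel, from the hardware list
BYTES_PER_PIXEL = 3                # ASSUMPTION: 24-bit color, no compression
LINK_BITS_PER_S = 1_000_000_000    # ASSUMPTION: 1 Gb/s local area network

BITS_PER_FRAME = WIDTH * HEIGHT * BYTES_PER_PIXEL * 8


def raw_bandwidth_bits_per_s(fps):
    """Bits per second needed to send every frame uncompressed."""
    return BITS_PER_FRAME * fps


def max_raw_fps(link_bits_per_s=LINK_BITS_PER_S):
    """Highest uncompressed frame rate the link could carry, ignoring overhead."""
    return link_bits_per_s / BITS_PER_FRAME


print(f"60 fps needs {raw_bandwidth_bits_per_s(60) / 1e9:.2f} Gb/s uncompressed")
print(f"a 1 Gb/s link carries at most {max_raw_fps():.1f} fps uncompressed")
```

Under these assumptions a single panel at 60 frames per second needs roughly 3.3 Gb/s, and a 1 Gb/s link tops out at about 18 uncompressed frames per second, which is consistent with remote viewers settling for reduced frame rates.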