Click here for a two-page summary of VTOY performance as of August 2000

Eye contact, facial expressions, and actions play an important role in human communication. In addition to conveying emotions, they are employed to augment and control the flow of human interactions. Toys are currently visually blind: they cannot recognize the presence of humans, identify their communicative messages, or react to these messages. Animators have long recognized the value of facial expressions in making actors appear more realistic. Moreover, they often employ exaggerated (cartoon-like) animations to increase the intensity of the viewer's involvement.

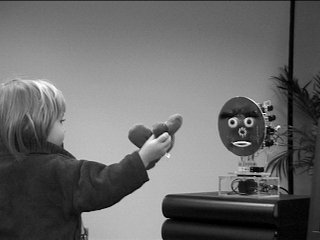

The V-TOY robot, PONG, has a total of 12 servos resulting in 11 1/2 degrees of freedom. The eyes, each constructed from a ping-pong ball, have two degrees of rotational freedom: azimuth and elevation. This enables PONG to establish eye contact with people in the camera's field of view and track them as they move. The eyebrows each have one degree of freedom, corresponding to the corrugator supercilii and medial frontalis muscles. This allows PONG to raise and lower his eyebrows. The mouth is controlled by 4 servos. The first two move the left and right mouth corners up and down. The second two move the upper and lower middle lip up and down, so that the mouth can be opened and closed. Finally, the neck has two servos controlling the pan and tilt position of the head. PONG is interfaced through an RS232 port driven by a Java application that allows control of all 12 servos. The video camera is embedded in the position of the nose.

V-TOY is a prototype of a future toy having the following capabilities:

- Detection of the presence of humans, their gaze and facial actions.

- Controlled neck and eye movements, and generation of facial deformations such as mouth and eyebrow deformations.

- Speaker localization and speech recognition for verbal commands.

- A small set of behaviors designed to attract the attention of a human.
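
The servo layout described for PONG (four eye servos, two eyebrow servos, four mouth servos, and two neck servos) can be sketched as a Java enumeration. This is only an illustrative sketch: the names `PongCommandSketch`, `Servo`, `frame`, and `clampPosition` are hypothetical, and the channel order, the 0 to 254 position range, and the three-byte frame layout are assumptions, since the actual RS232 protocol used by the Java application is not described here. The sketch builds command bytes only and does not open a serial port.

```java
// Hypothetical sketch: channel order, position range, and frame layout are
// assumptions for illustration, not PONG's documented protocol.
class PongCommandSketch {

    /** The 12 servos named in the description, in an assumed channel order. */
    enum Servo {
        LEFT_EYE_AZIMUTH, LEFT_EYE_ELEVATION,
        RIGHT_EYE_AZIMUTH, RIGHT_EYE_ELEVATION,
        LEFT_EYEBROW, RIGHT_EYEBROW,
        MOUTH_LEFT_CORNER, MOUTH_RIGHT_CORNER,
        UPPER_MIDDLE_LIP, LOWER_MIDDLE_LIP,
        NECK_PAN, NECK_TILT;

        /** Assumed channel number: the declaration order, 0 through 11. */
        int channel() {
            return ordinal();
        }
    }

    static final int SYNC = 0xFF;          // assumed start-of-frame marker
    static final int MAX_POSITION = 254;   // assumed upper bound, kept below SYNC

    /** Clamps a requested position into the assumed range 0..254. */
    static int clampPosition(int position) {
        return Math.max(0, Math.min(MAX_POSITION, position));
    }

    /** Builds an assumed three-byte frame: sync, channel, position. */
    static byte[] frame(Servo servo, int position) {
        return new byte[] {
            (byte) SYNC,
            (byte) servo.channel(),
            (byte) clampPosition(position)
        };
    }

    public static void main(String[] args) {
        System.out.println("servos: " + Servo.values().length);
        // Request an out-of-range pan position to show the clamping.
        byte[] f = frame(Servo.NECK_PAN, 300);
        System.out.printf("%02X %02X %02X%n", f[0] & 0xFF, f[1] & 0xFF, f[2] & 0xFF);
    }
}
```

Keeping the servo names in one enumeration means that a behavior such as raising both eyebrows can be written against `LEFT_EYEBROW` and `RIGHT_EYEBROW` rather than raw channel numbers, whatever the real wire format turns out to be.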

Face expression recognition

Gaze recognition and face expression generation